Published on: 2 November 2025

Scholars, researchers, professionals, and institutions blindly trust the authenticity and credibility of academic journals listed in the FT 50 journals list, Clarivate's Journal Citation Reports (JCR), and Scopus. Many institutions use these indexing and ranking services to evaluate the performance of their faculty members and to make decisions about hiring, promotion, and research funding allocation. Regarding an information source as credible and reputable doesn't mean it is flawless. Even the "big names" make mistakes and come with their own baggage, so you have to keep an eye out for their blind spots, biases, and gaps. If you rely solely on one perspective, you risk overlooking the broader picture. So poke around, question things, and don't let a journal's reputation make you lazy; that is how you get a real grip on what is sound. Today, I will present five instances from highly credible journals and discuss surprising blunders their editors committed.

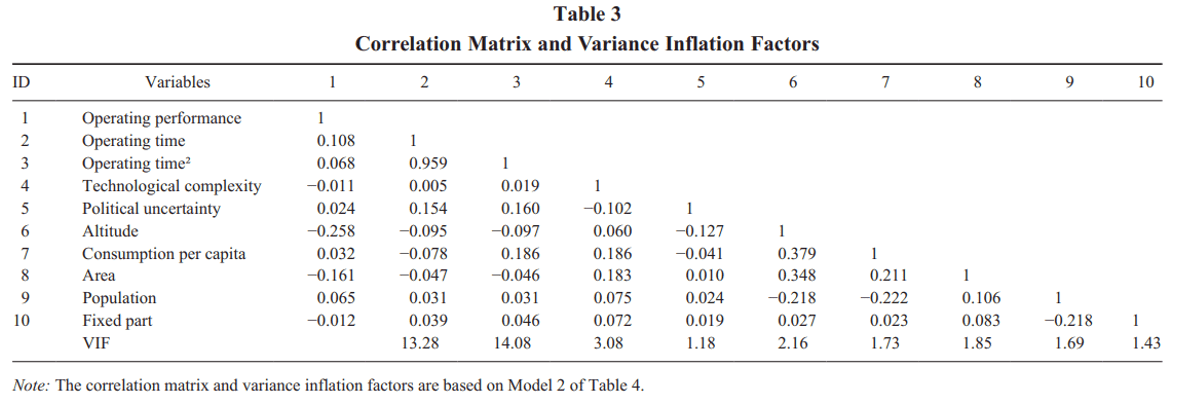

Journal of Management (JOM) is a heavyweight outlet in the management sciences, with a 2024 Clarivate SSCI Impact Factor of 9 and a Scopus Q1 ranking. I am going to report a glaring mistake in the article titled "Comparing Learning-by-Doing Between In-House Provision and External Contracting in Public Service Provision," published on 26 June 2025. The item of interest for my readers is the correlation matrix reported by the authors (Table 3, p. 17). Can you find a major issue in this table? Stop reading my critique for now, look at the table, and try to identify a big blunder.

Couldn't find it? Those who did are right: the table was published without the statistical significance levels (p-values) of the relationships among the variables, and the values are not reported in the text either. Publishing a correlation matrix without the corresponding p-values is generally considered wrong, unprofessional, and unethical in scientific and professional contexts. A reader with knowledge of statistics would stop reading at this point, because the table alone does not tell them whether the correlations among the variables are significant. Without significance levels, any interpretation of the relationships is potentially misleading and lacks statistical rigor. By omitting p-values, the authors fail to provide the statistical evidence needed to support their claims about the relationships between variables. Scientific transparency requires providing the information readers need to understand, evaluate, and potentially reproduce a study's findings. P-values are a critical piece of that information; without them, readers, reviewers, and other researchers cannot readily assess the reliability of the reported correlations or tell whether the findings are robust or merely artifacts of the specific sample.

Would you expect the editors of JOM to let such a blunder through? Probably not. Yet the article is live with it!
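For readers who want to check a table like this themselves: when a paper reports r and the sample size n, the two-sided p-value of a Pearson correlation can be recovered from the standard t-test, t = r·√(n−2)/√(1−r²) with n−2 degrees of freedom. Doing this is the authors' job, not the reader's, and it only works when n is reported. The Python sketch below (standard library only) computes r and p for every pair of variables. The function names and the sample data are hypothetical, and the incomplete-beta routine follows the well-known continued-fraction method; treat it as an illustration, not a validated statistics package. In practice, `scipy.stats.pearsonr` should give the same two-sided p-value.

```python
import math
from itertools import combinations


def _betacf(a, b, x, max_iter=300, eps=3e-14):
    """Continued fraction for the regularized incomplete beta function (modified Lentz)."""
    tiny = 1e-300
    qab, qap, qam = a + b, a + 1.0, a - 1.0
    c = 1.0
    d = 1.0 - qab * x / qap
    d = 1.0 / (d if abs(d) > tiny else tiny)
    h = d
    for m in range(1, max_iter + 1):
        m2 = 2 * m
        # Even step of the continued fraction.
        aa = m * (b - m) * x / ((qam + m2) * (a + m2))
        d = 1.0 + aa * d
        d = 1.0 / (d if abs(d) > tiny else tiny)
        c = 1.0 + aa / c
        c = c if abs(c) > tiny else tiny
        h *= d * c
        # Odd step of the continued fraction.
        aa = -(a + m) * (qab + m) * x / ((a + m2) * (qap + m2))
        d = 1.0 + aa * d
        d = 1.0 / (d if abs(d) > tiny else tiny)
        c = 1.0 + aa / c
        c = c if abs(c) > tiny else tiny
        delta = d * c
        h *= delta
        if abs(delta - 1.0) < eps:
            break
    return h


def _betai(a, b, x):
    """Regularized incomplete beta function I_x(a, b)."""
    if x <= 0.0:
        return 0.0
    if x >= 1.0:
        return 1.0
    bt = math.exp(math.lgamma(a + b) - math.lgamma(a) - math.lgamma(b)
                  + a * math.log(x) + b * math.log(1.0 - x))
    # Use the symmetry I_x(a, b) = 1 - I_{1-x}(b, a) where the fraction converges faster.
    if x < (a + 1.0) / (a + b + 2.0):
        return bt * _betacf(a, b, x) / a
    return 1.0 - bt * _betacf(b, a, 1.0 - x) / b


def t_test_pvalue(t, df):
    """Two-sided p-value for a Student t statistic with df degrees of freedom."""
    return _betai(df / 2.0, 0.5, df / (df + t * t))


def pearson_r(x, y):
    """Sample Pearson correlation between two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def correlation_pvalue(r, n):
    """Two-sided p-value for H0: rho = 0, via t = r*sqrt(n-2)/sqrt(1-r^2)."""
    if abs(r) >= 1.0:
        return 0.0
    df = n - 2
    t = r * math.sqrt(df) / math.sqrt(1.0 - r * r)
    return t_test_pvalue(t, df)


def correlation_table(data):
    """Return {(var_a, var_b): (r, p)} for every pair of variables in `data`."""
    n = len(next(iter(data.values())))
    table = {}
    for a, b in combinations(data, 2):
        r = pearson_r(data[a], data[b])
        table[(a, b)] = (r, correlation_pvalue(r, n))
    return table


if __name__ == "__main__":
    # Hypothetical data, for illustration only.
    data = {
        "x": [1, 2, 3, 4, 5, 6, 7, 8, 9, 10],
        "y": [2.1, 3.9, 6.2, 7.8, 10.1, 12.2, 13.8, 16.1, 18.0, 20.2],
        "z": [1, -1, 1, -1, 1, -1, 1, -1, 1, -1],
    }
    for (a, b), (r, p) in correlation_table(data).items():
        stars = "**" if p < 0.01 else "*" if p < 0.05 else ""
        print(f"{a}-{b}: r = {r:.2f}{stars} (p = {p:.3f})")
```

The starred output mirrors the usual convention in published correlation tables (* p < .05, ** p < .01), which is exactly the information missing from the table discussed above.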

British Journal of Management (BJM) is an elite journal published by the British Academy of Management, with a 2024 Clarivate SSCI Impact Factor of 5.7 and a Scopus Q1 rating. On 3 July 2024, it published the article under criticism, titled "HRM Algorithms: Moderating the Relationship between Chaotic Markets and Strategic Renewal." The authors build their study's conceptual model from four variables. Among other issues, the article was published without the standard correlation matrix.

Reporting a correlation matrix along with p-values is essential when testing hypotheses using regression, Covariance-Based Structural Equation Modeling (CB-SEM), or Partial Least Squares Path Modeling (PLS-PM), for several reasons. First, before diving into a complex model, a correlation matrix gives a quick overview of the direct, pairwise linear relationships among all of the study's independent and dependent variables, which helps readers understand the basic structure of the data. It shows which independent variables are strongly related to the dependent variable and in which direction (positive or negative), which can inform hypothesis development and model specification. Second, it supports one of the most important checks in any empirical work: detecting multicollinearity, which occurs when two or more independent variables in a regression model are highly correlated with each other and which inflates the standard errors of the affected coefficients. Third, providing the correlation matrix along with means and standard deviations allows other researchers to understand the fundamental characteristics of the data and, in principle, to attempt to replicate the findings or build on the work. Reporting the correlation matrix is a cornerstone of good scientific research reporting.

This is surprising: either the editors of BJM do not consider the correlation matrix an important component of an empirical research paper, or they do not realize its significance. Either way, they allowed this article to be published without one.
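To make the multicollinearity check concrete, here is a minimal Python sketch (standard library only) of two things a reader can do once a correlation matrix is reported: flag predictor pairs whose |r| exceeds a threshold, and compute variance inflation factors (VIFs), which are the diagonal elements of the inverse of the predictors' correlation matrix. The 0.8 cut-off, the function names, and the data are hypothetical; commonly cited rules of thumb treat VIFs above 5 or 10 as a warning sign, but these are conventions rather than strict limits.

```python
import math
from itertools import combinations


def pearson_r(x, y):
    """Sample Pearson correlation between two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def correlation_matrix(columns):
    """k x k correlation matrix for a list of k predictor columns."""
    k = len(columns)
    return [[1.0 if i == j else pearson_r(columns[i], columns[j]) for j in range(k)]
            for i in range(k)]


def invert(matrix):
    """Gauss-Jordan inversion with partial pivoting."""
    n = len(matrix)
    aug = [list(row) + [1.0 if i == j else 0.0 for j in range(n)]
           for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda k: abs(aug[k][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("singular matrix: perfect multicollinearity")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        p = aug[col][col]
        aug[col] = [v / p for v in aug[col]]
        for r in range(n):
            if r != col:
                f = aug[r][col]
                aug[r] = [a - f * b for a, b in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]


def vifs(columns):
    """VIFs: the diagonal of the inverted predictor correlation matrix."""
    inv = invert(correlation_matrix(columns))
    return [inv[i][i] for i in range(len(columns))]


def flag_pairs(names, columns, threshold=0.8):
    """Predictor pairs whose |r| exceeds a (hypothetical) rule-of-thumb threshold."""
    flagged = []
    for i, j in combinations(range(len(columns)), 2):
        r = pearson_r(columns[i], columns[j])
        if abs(r) > threshold:
            flagged.append((names[i], names[j], round(r, 2)))
    return flagged


if __name__ == "__main__":
    # Hypothetical predictors, for illustration only.
    names = ["x1", "x2", "x3"]
    columns = [
        [1, 2, 3, 4, 5, 6, 7, 8],
        [2, 1, 4, 3, 6, 5, 8, 7],
        [3, 1, 4, 1, 5, 9, 2, 6],
    ]
    print("High pairwise correlations:", flag_pairs(names, columns))
    for name, v in zip(names, vifs(columns)):
        print(f"VIF({name}) = {v:.2f}")
```

Here x1 and x2 correlate at about 0.90 and are flagged. Pairwise screening can miss collinearity that involves three or more predictors at once, which is why the VIFs are computed from the full matrix. Neither check is possible for readers when the correlation matrix is not published.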

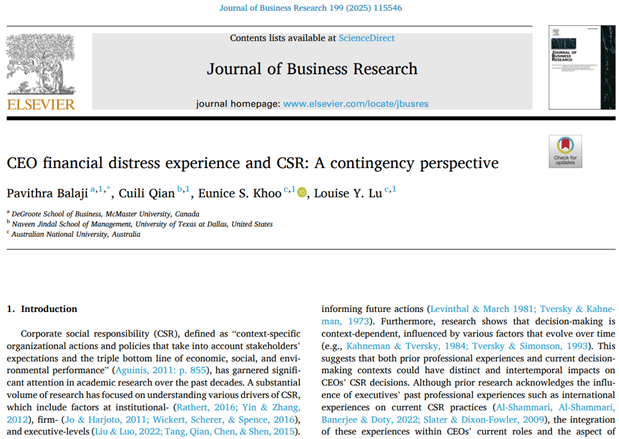

Journal of Business Research (JBR) is a so-called well-respected and reputable Elsevier journal. According to Clarivate's 2025 Journal Citation Reports (JCR), the journal's 2024 Impact Factor is 9.8, and Scopus ranks it Q1. I invite my readers to look at the article "CEO financial distress experience and CSR: A contingency perspective," published in Volume 199, October 2025.

What did you observe? Yes, you are right! The abstract is missing. Publishing a standard research article without an abstract would be highly unusual in today's academic landscape, and for a supposedly "reputed and high impact" journal it is a significant red flag. This is a serious oversight; we should call it a blatant blunder committed by JBR's editors.

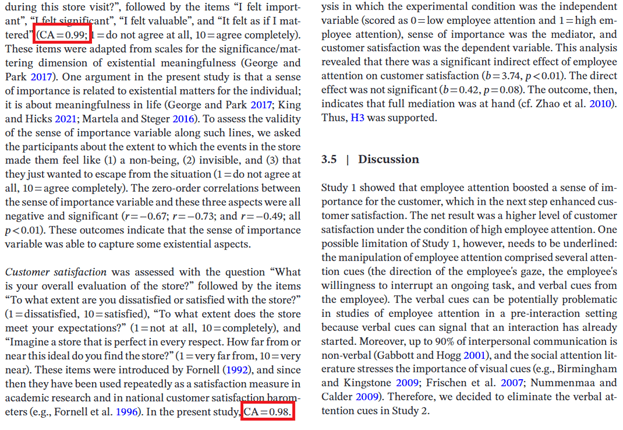

Statisticians generally advise their students to achieve a Cronbach's alpha of 0.70 or higher to demonstrate good internal consistency of their study scales. Unfortunately, professors, scholars, and editors without in-depth knowledge of statistics and psychometrics follow this "rule of thumb" without understanding that alpha also has a sensible upper limit, beyond which very high values undermine rather than support a study's findings. A case in point is the study titled "Receiving Employee Attention on the Floor of the Store and Its Effects on Customer Satisfaction," published in the Journal of Consumer Behaviour (SSCI Impact Factor 2024: 5.2) on 21 March 2025.

In Study 1, sub-section: Measurement (p. 1660), the authors reported a Cronbach's alpha value of 0.99 for the Sense of Importance scale and 0.98 for the Customer Satisfaction scale.

Statisticians know that such high values indicate item redundancy or an overly narrow scale. Authors who obtain them should review the items to see whether any are essentially duplicates, or whether the scale could be improved by adding items that capture different facets of the construct. "Panayides (2013)" makes this point in the concluding remarks of his study (p. 695):

“Second, alpha “should not be too high (over 0.90 or so). Higher values may reflect unnecessary duplication of content across items and point more to redundancy than to homogeneity” (Streiner, 2003, p. 102). Furthermore, higher values may reflect a narrow coverage of the construct which jeopardizes the precision of a large proportion of person measures.”

I wonder how an editor could overlook such a serious issue and allow publication without taking any action to rectify it. Only someone without statistical acumen would let this pass.
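A minimal sketch of why very high alpha values can reflect redundancy rather than quality: the Python function below computes Cronbach's alpha with the usual formula, α = k/(k−1) · (1 − Σ item variances / variance of total scores), and the demo pads a three-item scale with verbatim copies of two of its items. The responses are invented for illustration; the point is only that duplicating content mechanically pushes alpha up while the scale measures nothing new.

```python
from statistics import variance


def cronbach_alpha(items):
    """Cronbach's alpha for a list of items, each a list of respondent scores.

    alpha = k / (k - 1) * (1 - sum(item variances) / variance(total scores)),
    using sample variances throughout.
    """
    k = len(items)
    if k < 2:
        raise ValueError("alpha needs at least two items")
    totals = [sum(scores) for scores in zip(*items)]  # one total per respondent
    item_var = sum(variance(scores) for scores in items)
    return k / (k - 1) * (1 - item_var / variance(totals))


if __name__ == "__main__":
    # Hypothetical 5-point responses from six respondents to three distinct items.
    item1 = [4, 5, 3, 2, 4, 5]
    item2 = [3, 5, 4, 2, 3, 4]
    item3 = [4, 4, 2, 3, 5, 5]
    base = [item1, item2, item3]
    # The same scale padded with verbatim copies of item1 and item2 (pure redundancy).
    padded = base + [list(item1), list(item2)]
    print(f"alpha, 3 distinct items:        {cronbach_alpha(base):.2f}")
    print(f"alpha, with 2 duplicated items: {cronbach_alpha(padded):.2f}")
```

With these made-up responses, alpha rises from about 0.76 to about 0.90 purely through duplication, and a scale made of identical items would reach an alpha of 1. Values of 0.98 and 0.99 are therefore a prompt to inspect the items, not a badge of quality.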

Lastly, I shall present a few articles published with grammatically or contextually incorrect titles. In the article titled "Developing process and product innovation through internal and external knowledge sources in manufacturing Malaysian firms: the role of absorptive capacity," published in Business Process Management Journal (Clarivate SSCI Impact Factor 2024: 5.8), the misplaced modifier means the authors appear to be trying to "manufacture Malaysian firms" rather than studying Malaysian manufacturing firms. What else can I say?

Look at the title of the next article, "Uncovering the Muslim Leisure Tourists' Motivation to Travel Domestically – Do Gender and Generation Matters?", published in Tourism and Hospitality Management, a journal published by the University of Rijeka, Croatia (Clarivate SSCI Impact Factor 2024: 1.6; Scopus Q3). Do you spot the grammatical error? The compound subject "Gender and Generation" is plural, so the verb should be "Matter"; as published, the title lacks subject-verb agreement. Would you want your study published without proofreading and copyediting?

The next instance of editorial failure was published in World Review of Entrepreneurship, Management and Sustainable Development by Inderscience, a Scopus Q4 journal. The title of the article "Entrepreneur proactiveness and customer value: the moderating role of innovation and market orientation" indicates that the authors tested moderation. However, the theoretical framework presented on page 194 contains no moderation at all; it is a parallel mediation framework. In other words, the editors of this journal did not even catch a title that misdescribes the study's own model.

I know research geeks and sober academics will be saddened by this critique, because they would not expect such serious blunders from the editors of these supposedly high-quality business journals. This article is not about predatory journals, but the editorial behavior described here can definitely be called predatory. I suggest that researchers, editors, and reviewers take note of "Anwar's (2022)" suggestions:

“…researchers, teachers, reviewers, and editors should give up the legendary conceptions and evaluation criteria by attending available resources and authority figures in related fields (p. 95).”

“If an academic figure is interested in or has proposed any theoretical or religious concept (such as Din, 1989), new construct, algorithm, routine, statistical method, etc. he/she should seek advice and approval from the authority figure(s) in the related domain. This advice and approval should not be confused with the doctoral training process or peer review system (p. 95).”

According to Anwar (2022), "Authority figures in academia comprise only those elements of the academic communities who not only have certitude about something but also have a right to that certitude (p. 95)." I argue that editors who allow erroneous articles to be published do not qualify as "authority figures." They should seek training from authority figures in their domains. Reviewers and authors should likewise consult authority figures to learn appropriate research reporting, statistical methods, theory development, and so on. Authors should send their manuscripts to authority figures in their domains and seek their advice before submitting them to journals.

Don’t hesitate to share your thoughts and experiences with similar journals.

"Scholarly Criticism" was launched to serve as a watchdog on business research published in so-called high-quality, Clarivate/Scopus-indexed business journals. At present, this role is vacant: no one is working to keep authors and publishers who conduct and publish erroneous business research on the right track. To fill this gap, our organization acts as a key stakeholder in business research publishing.

For invited lectures, trainings, interviews, and seminars, "Scholarly Criticism" can be contacted at Attention-Required@proton.me

Disclaimer: The content published on this website is for educational and informational purposes only. We are not against authors or journals; we only strive to highlight unethical and unscientific research reporting and publishing practices. We hope our efforts will significantly contribute to improving the quality control applied by business journals.